Cybersecurity researchers at Wiz uncovered five security flaws, collectively tracked as SAPwned, in the cloud-based platform SAP AI Core. An attacker could exploit the flaws to obtain access tokens and customer data.

SAP AI Core, developed by SAP, is a cloud-based platform providing the essential infrastructure and tools for constructing, managing, and deploying predictive AI workflows.

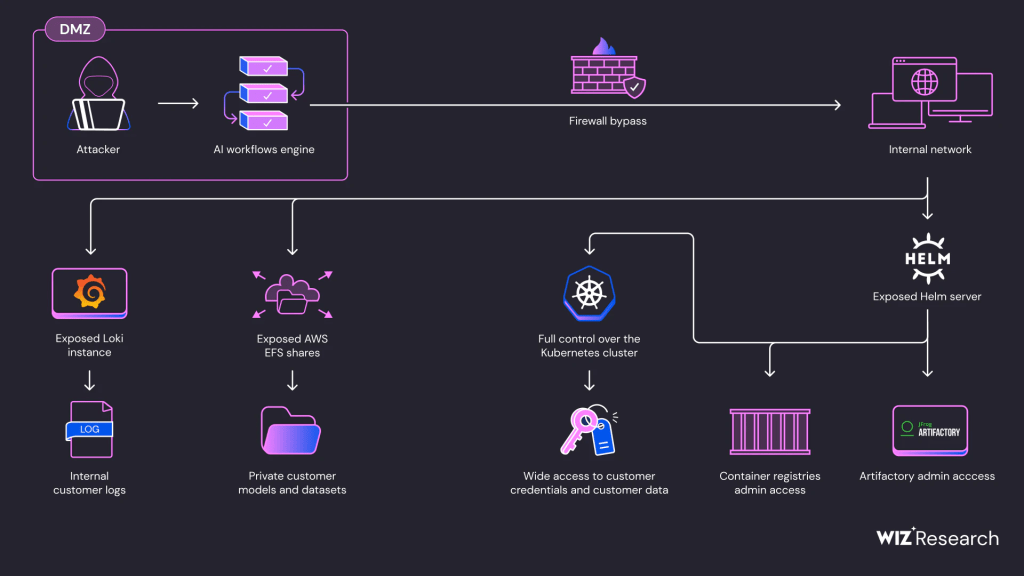

The researchers focused on the security risks of AI training services that require access to sensitive customer data. They discovered that by executing arbitrary code through legitimate AI training procedures, they could gain extensive access to customers’ private data and credentials across various cloud services. The researchers demonstrated that they could read and modify Docker images and artifacts, and gain administrator privileges on SAP’s Kubernetes cluster. These vulnerabilities could have allowed attackers to access and contaminate customer environments and related services.

“Our research into SAP AI Core began through executing legitimate AI training procedures using SAP’s infrastructure. By executing arbitrary code, we were able to move laterally and take over the service – gaining access to customers’ private files, along with credentials to customers’ cloud environments: AWS, Azure, SAP HANA Cloud, and more,” reads the report published by Wiz. “The vulnerabilities we found could have allowed attackers to access customers’ data and contaminate internal artifacts – spreading to related services and other customers’ environments.”

The researchers explained that they were able to run malicious AI models and training procedures, and they urged the industry to improve its isolation and sandboxing standards when running AI models.

Wiz reported the flaws to SAP on January 25, 2024, and the company fixed them by May 15, 2024.

“Researchers also highlighted that threat actors could gain cluster administrator privileges on SAP AI Core’s Kubernetes cluster by exploiting the exposed Helm package manager server. Once attackers have obtained this access, they can steal sensitive data from other customers’ Pods, interfere with AI data, and manipulate models’ inference,” concludes the report. “Additionally, attackers could create AI applications to bypass network restrictions and exploit AWS Elastic File System (EFS) misconfigurations, obtaining AWS tokens and accessing customer code and training datasets.”


(SecurityAffairs – hacking, SAP AI Core)